Affine Tuning: Embodied Interaction and Dynamic Composition

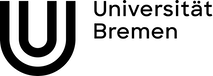

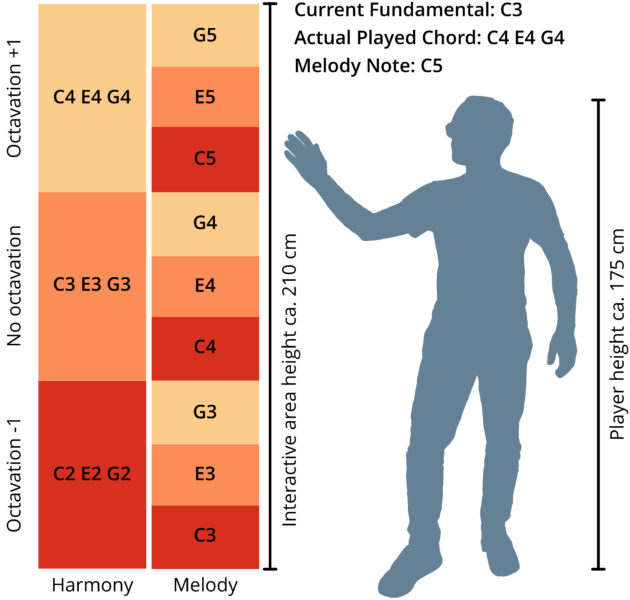

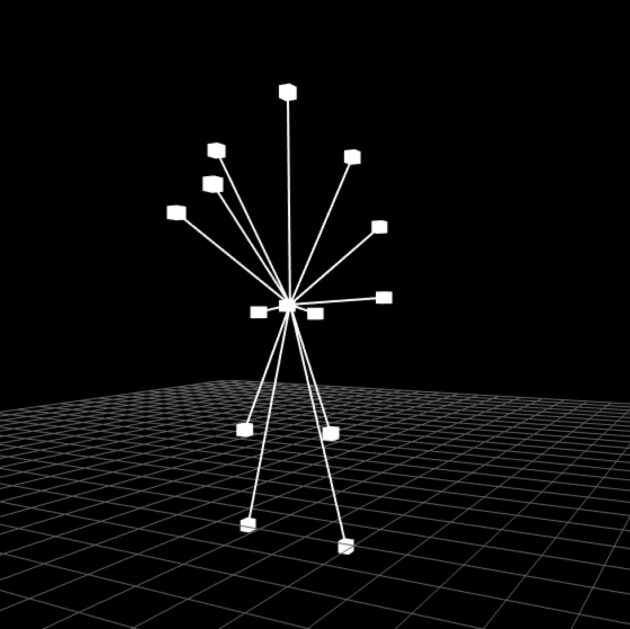

Affine Tuning is an iPhone app that creates a bidirectional connection between dance performance and music. Players interact with musical pieces through their body movement and posture, wherever they feel comfortable. Their performance is captured by the phone camera, analyzed locally by pose estimation software, and translated into musical feedback by custom rule systems. All feedback is audio-only.

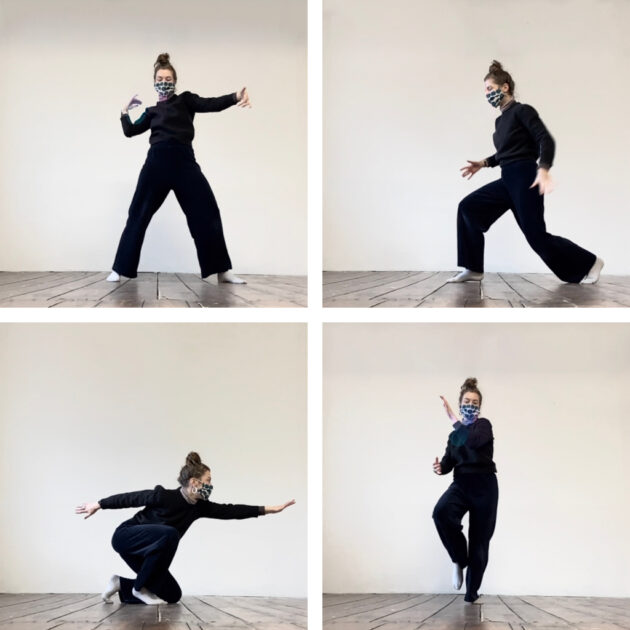

The music in Affine Tuning is composed beforehand as blueprints, which are interpreted on the fly according to the player's input. For example, while a given tempo and harmony are followed, the notes played are chosen based on the vertical position of certain body parts. In addition, the intensity of movement influences the notes' velocity.
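The position-to-pitch and intensity-to-velocity idea can be illustrated with a minimal sketch. It is not Affine Tuning's actual rule system: the function names, the assumption that keypoints are normalized to [0, 1] with y = 0 at the bottom of the frame, the chord given as MIDI note numbers, and the definition of intensity as mean keypoint displacement between two frames are all hypothetical. Timing (snapping notes to the blueprint's tempo) is left out.

```python
"""Hypothetical sketch of a position-to-pitch and intensity-to-velocity mapping.

Assumptions (not taken from Affine Tuning): keypoints are normalized to [0, 1]
with y = 0 at the bottom of the frame, the blueprint supplies the current chord
as MIDI note numbers, and movement intensity is the mean keypoint displacement
between two consecutive frames.
"""
from math import hypot

Point = tuple[float, float]


def pitch_for_height(y: float, chord: list[int], octaves: int = 2) -> int:
    """Pick a chord tone from the vertical position of a body part.

    The chord is spread over `octaves` octaves and sorted, so higher
    positions select higher notes.
    """
    tones = sorted(note + 12 * o for o in range(octaves) for note in chord)
    y = min(max(y, 0.0), 1.0)  # clamp out-of-frame estimates
    index = min(int(y * len(tones)), len(tones) - 1)
    return tones[index]


def movement_intensity(prev: dict[str, Point], curr: dict[str, Point]) -> float:
    """Mean displacement of the keypoints detected in both frames."""
    shared = prev.keys() & curr.keys()
    if not shared:
        return 0.0
    total = sum(
        hypot(curr[k][0] - prev[k][0], curr[k][1] - prev[k][1]) for k in shared
    )
    return total / len(shared)


def velocity_for_intensity(intensity: float, max_intensity: float = 0.1) -> int:
    """Scale intensity linearly to a MIDI velocity in 1..127."""
    ratio = min(intensity / max_intensity, 1.0)
    return max(1, round(ratio * 127))


# Example: a raised wrist over a C major chord, moving slightly between frames.
c_major = [60, 64, 67]
note = pitch_for_height(0.9, c_major)
speed = movement_intensity({"wrist": (0.5, 0.85)}, {"wrist": (0.5, 0.9)})
print(note, velocity_for_intensity(speed))
```

Picking from chord tones rather than a chromatic range keeps every position consonant with the blueprint's harmony, which matches the idea of notes being chosen within a given harmony.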

Listen here to an audio capture of a performance of the introductory track “Just Getting Up”, which is based on Pachelbel's Canon.

Besides this core mechanism, a number of interactive virtual instruments and audio effects are available, which can be connected to all kinds of parameters, such as body rotation, expansion/contraction, and more. The parameters of the input-to-music translation are not layered on top of the music; they add another dimension of expression for the composer. They are extended and customized with every new composition.
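To illustrate how a body parameter such as expansion/contraction might drive an audio effect, here is a minimal sketch under stated assumptions. Defining expansion as the mean distance of keypoints from their centroid, and mapping it exponentially to a hypothetical low-pass filter cutoff between 200 Hz and 8000 Hz, are illustrative choices, not the app's documented rules; all names are hypothetical.

```python
"""Hypothetical sketch: map body expansion/contraction to an effect parameter.

Assumptions (not Affine Tuning's actual rules): keypoints are normalized image
coordinates, expansion is the mean distance of keypoints from their centroid,
and the target is a low-pass filter cutoff mapped exponentially between two
frequencies.
"""
from math import hypot


def expansion(points: list[tuple[float, float]]) -> float:
    """Mean distance of keypoints from their centroid (0.0 for no points)."""
    if not points:
        return 0.0
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return sum(hypot(x - cx, y - cy) for x, y in points) / len(points)


def map_exponential(value: float, in_min: float, in_max: float,
                    out_min: float, out_max: float) -> float:
    """Clamp value to [in_min, in_max], then map it exponentially to [out_min, out_max].

    An exponential curve suits frequencies because pitch perception is
    roughly logarithmic.
    """
    t = (min(max(value, in_min), in_max) - in_min) / (in_max - in_min)
    return out_min * (out_max / out_min) ** t


# Example: a contracted pose yields a darker sound, an open pose a brighter one.
contracted = [(0.48, 0.5), (0.52, 0.5), (0.5, 0.45), (0.5, 0.55)]
open_pose = [(0.2, 0.5), (0.8, 0.5), (0.5, 0.2), (0.5, 0.8)]
for pose in (contracted, open_pose):
    cutoff = map_exponential(expansion(pose), 0.05, 0.3, 200.0, 8000.0)
    print(f"expansion={expansion(pose):.3f} cutoff={cutoff:.0f} Hz")
```

Because the mapping is a separate function, the same body parameter could be routed to a different effect or range per composition, in the spirit of parameters being customized with every new piece.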

All music in Affine Tuning consists of original pieces. The composition developed during the thesis is called Nefrin, with more to follow. The app will be released on the iOS App Store in April 2021.

The full project documentation is available here.